The Making of Mechasobi

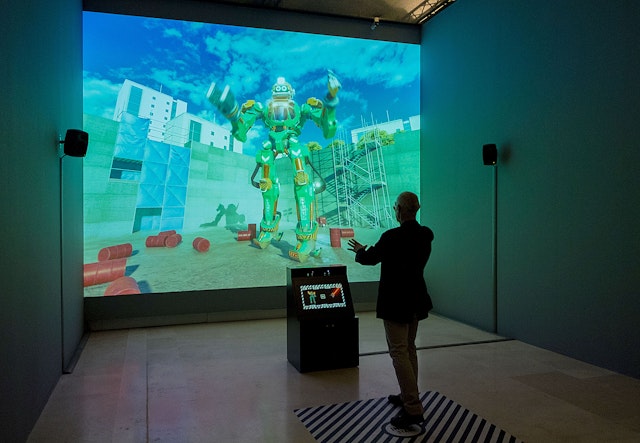

Commentary: Apr 20, 2018

In the autumn of 2017, the Barbican commissioned a team at Pentagram, led by partners Jody Hudson-Powell and Luke Powell, to create an interactive digital installation for its touring exhibition, ‘Mangasia: Wonderlands of Asian Comics’.

The team came up with the idea of a real-time 3D mech that a single visitor would control through their own body movements. To let the mech convincingly mimic those movements, its design was constrained to a humanoid form.

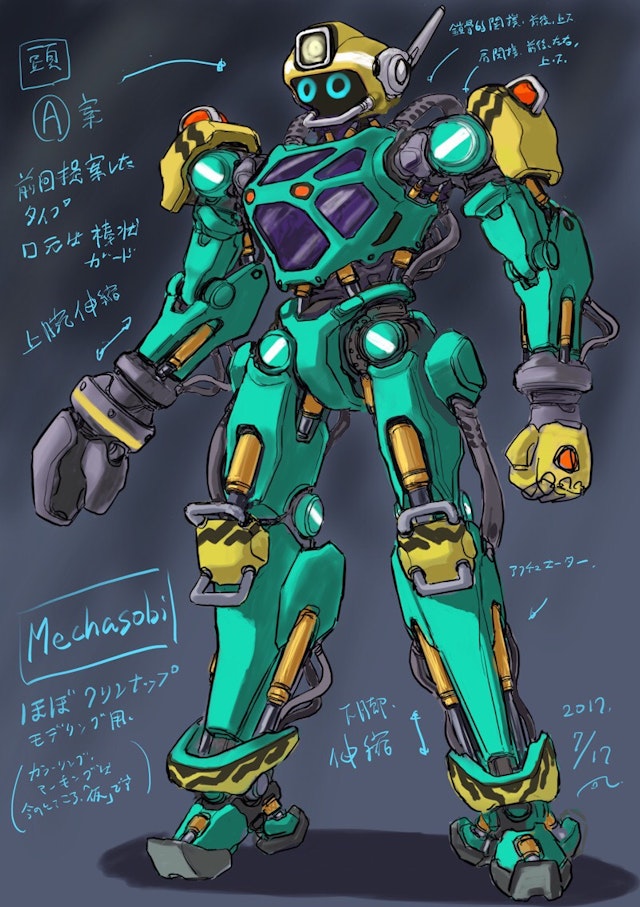

Concept Design

Imagining a not-so-distant future in which supersized robots are used for the construction of cities, a building-sized robot named ‘Mechasobi’ was created. Friendly and non-threatening at first glance, Mechasobi becomes chaotic and potentially lethal once control is turned over to the exhibition's visitors.

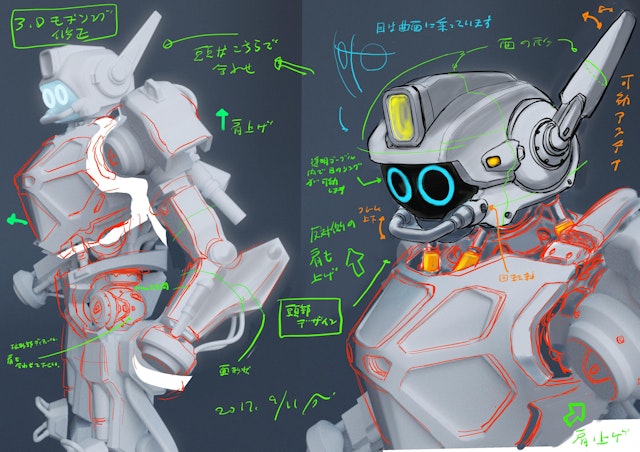

The Barbican commissioned Shoji Kawamori to design a custom mech. Working to team Hudson-Powell’s specifications, Kawamori's studio iterated and delivered 2D drawings of their design from several angles.

The team modelled a rough 3D block-out based on Kawamori's drawings.

By focusing on form and proportion, the team were able to transfer the design to 3D quickly, while leaving room for Kawamori to critique and iterate further.

SDK Tests

The team began by testing Kinect demos in Unity.

Once they understood the available functionality and the hardware's limitations, the team created a new Unity project and re-implemented the body-tracking code from the demos. By omitting unnecessary features and restructuring the third-party code to fit their own preferred architecture, they built a reliable, extensible framework to iterate on.

Modelling

Once the design was finalised, the team began building a high-res model around the block-out. Without worrying about polygon count, they modelled as many details as possible and made sure there were no perfectly sharp corners, which are rarely found in real life.

Everything was modelled in Blender using subdivision surface modelling, with the occasional Boolean modifier for cutting into curved surfaces.

High-res models allow photoreal smoothness and detailing, but they are too memory-intensive to render in real time. Rather than omit detail, the team used normal maps to transfer the high-res surface detail onto a UV-unwrapped, low-res model. A normal map is an image that encodes a surface direction vector in each pixel, creating the illusion of curvature and detail across an otherwise faceted, low-res surface.
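
To make the encoding concrete, here is a minimal Python sketch of how a tangent-space normal map typically packs a unit normal into an 8-bit RGB texel and unpacks it again for shading. It is a generic illustration, not the team's pipeline (the baking itself happens in tools like Blender and Substance); the function names are hypothetical, and the simple Lambert term stands in for a real shader.

```python
import math

def encode_normal(n):
    """Pack a unit normal (components in [-1, 1]) into an 8-bit RGB texel."""
    # Map each component from [-1, 1] to [0, 255].
    return tuple(round((c * 0.5 + 0.5) * 255) for c in n)

def decode_normal(rgb):
    """Unpack an 8-bit RGB texel back into a unit-length normal."""
    # Map each channel from [0, 255] back to [-1, 1].
    x, y, z = ((c / 255.0) * 2.0 - 1.0 for c in rgb)
    # Renormalise to undo 8-bit quantisation error.
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)

def lambert(normal, light_dir):
    """Diffuse brightness: cosine of the angle to the light, clamped at zero."""
    return max(0.0, sum(a * b for a, b in zip(normal, light_dir)))

# A flat texel: the normal points straight out of the low-res polygon.
flat = decode_normal(encode_normal((0.0, 0.0, 1.0)))
# A texel baked from a 45-degree bevel on the high-res model.
s = math.sqrt(0.5)
bevel = decode_normal(encode_normal((s, 0.0, s)))

light = (0.0, 0.0, 1.0)
print(round(lambert(flat, light), 3), round(lambert(bevel, light), 3))
```

Both texels sit on the same flat polygon, yet the bevel texel shades darker because its stored normal is tilted away from the light, which is exactly the illusion of curvature a normal map provides.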

Materials

Based on image references of typical construction-site machinery, the team designed a collection of believable materials, including matching grime and wear. These were built in Substance Designer, then brought into Substance Painter along with the low-res model and its normal maps. Because the materials were layered the way real ones are (base metal, then coloured paint, then cavity dust), successive layers could be scratched away, just as wear works in the real world.
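
Substance Painter's layer stack is far richer than this, but the core idea of wear revealing the layers beneath can be sketched in a few lines of Python. The sketch assumes each layer has a single per-pixel coverage value and is blended bottom to top; the layer names, colours and the `scratch` helper are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    name: str
    color: tuple  # RGB, each channel in [0, 1]
    coverage: float  # 0.0 = absent, 1.0 = fully hides the layers beneath

def lerp(a, b, t):
    """Linear blend between two RGB colours."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))

def composite(layers):
    """Blend layers bottom to top; each covers what lies beneath by its coverage."""
    color = layers[0].color
    for layer in layers[1:]:
        color = lerp(color, layer.color, layer.coverage)
    return color

def scratch(layers, name, depth):
    """Wear away part of one layer (returning a new stack), exposing what sits below it."""
    worn = []
    for layer in layers:
        if layer.name == name:
            layer = Layer(layer.name, layer.color, max(0.0, layer.coverage - depth))
        worn.append(layer)
    return worn

# Hypothetical single-pixel stack: base metal, yellow paint, a light film of cavity dust.
stack = [
    Layer("metal", (0.55, 0.56, 0.58), 1.0),
    Layer("paint", (0.95, 0.75, 0.05), 1.0),
    Layer("dust", (0.45, 0.40, 0.33), 0.2),
]
print(composite(stack))                         # painted, slightly dusty
print(composite(scratch(stack, "paint", 1.0)))  # paint worn through: dusty bare metal
```

Ordering the stack like its physical counterpart is what makes wear believable: removing coverage from the paint can only ever reveal metal, never something implausible.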

Once the base colours, materials, and dirt were finalised, the team conceptualised some decals. These were translated and designed by Hitomi Iwasaki, and feature puns and conversational slogans to imply a friendly relationship between the mech and its user.

Rig Implementation

The model was rigged to match the Kinect's skeleton specification, then brought into Unity. The tracking was responsive and, with carefully tuned smoothing, conveyed a good sense of weight.
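
The post doesn't say which smoothing filter was used. One common choice is a per-joint exponential moving average, sketched below in Python under that assumption; the class name and joint label are hypothetical. A higher `smoothing` value makes the mech feel heavier but adds lag.

```python
class JointSmoother:
    """Per-joint exponential moving average of tracked positions."""

    def __init__(self, smoothing):
        # 0.0 = raw tracking; values near 1.0 = heavy, laggy motion.
        if not 0.0 <= smoothing < 1.0:
            raise ValueError("smoothing must be in [0, 1)")
        self.smoothing = smoothing
        self.state = {}  # joint name -> last smoothed (x, y, z)

    def update(self, joint, raw):
        prev = self.state.get(joint)
        if prev is None:
            smoothed = tuple(raw)  # first frame: nothing to blend with yet
        else:
            a = self.smoothing
            # Keep a share of the previous position, take the rest from the new sample.
            smoothed = tuple(a * p + (1.0 - a) * r for p, r in zip(prev, raw))
        self.state[joint] = smoothed
        return smoothed

smoother = JointSmoother(smoothing=0.5)
for frame in [(0.0, 1.0, 2.0), (1.0, 1.0, 2.0), (1.0, 1.0, 2.0)]:
    print(smoother.update("HandRight", frame))
```

Because the filter runs once per frame, its feel depends on frame rate; a production version would likely scale the blend factor by the frame time.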

Because the Kinect drives the skeleton from the hips outwards, dramatic hip movements caused grounding issues: the feet would float and slide. The Unity asset the team used could mitigate the effect with soft constraints, but it couldn't eradicate it completely.
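
The Unity asset isn't named, so the following is only a generic illustration of a soft constraint, written as a Python sketch: while a foot is near the floor it is pulled towards the spot where it first touched down, but only partially (`stiffness` below 1), so some of the tracked slide still gets through. The class name and thresholds are hypothetical.

```python
class SoftFootLock:
    """Partially pin a grounded foot to where it touched down."""

    def __init__(self, floor_y=0.0, contact_height=0.05, stiffness=0.8):
        self.floor_y = floor_y
        self.contact_height = contact_height  # hypothetical: metres above floor counted as contact
        self.stiffness = stiffness  # 0.0 = ignore the lock, 1.0 = hard lock
        self.anchor = None  # planted position, or None while the foot is lifted

    def solve(self, tracked):
        x, y, z = tracked
        if y - self.floor_y > self.contact_height:
            self.anchor = None  # foot lifted: release the lock
            return (x, y, z)
        if self.anchor is None:
            self.anchor = (x, self.floor_y, z)  # plant the foot where it landed
        k = self.stiffness
        # Pull towards the anchor without fully overriding the tracked position.
        return tuple(t + (a - t) * k for t, a in zip((x, y, z), self.anchor))

foot = SoftFootLock()
print(foot.solve((0.00, 0.02, 0.0)))  # touches down: pulled towards the floor
print(foot.solve((0.10, 0.02, 0.0)))  # tracking slides 10 cm: roughly 2 cm gets through
print(foot.solve((0.30, 0.40, 0.0)))  # lifted: lock released, raw tracking returned
```

With `stiffness` below 1 the lock reduces sliding without removing it, mirroring the limitation described above; a hard lock removes the slide but can pin the mech's foot away from where the user's real foot is.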

After some final testing and tweaking, the team began writing scripts to monitor the rig's movements, including relative velocities, poses, and stomps.
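
As an illustration of that kind of monitoring script, the Python sketch below derives a foot's vertical velocity by finite differences and flags a stomp when the foot reaches the floor while moving down fast. The same finite difference works for any joint, and subtracting the hip position first would give a velocity relative to the body. The class name and thresholds are assumptions, not values from the project.

```python
class StompDetector:
    """Track one foot's height and report vertical velocity and stomps."""

    def __init__(self, stomp_speed=1.5, floor_y=0.0, contact_height=0.05):
        self.stomp_speed = stomp_speed  # hypothetical: downward speed (m/s) that counts as a stomp
        self.floor_y = floor_y
        self.contact_height = contact_height  # hypothetical contact threshold (m)
        self.prev_y = None
        self.prev_vy = 0.0

    def _grounded(self, y):
        return y - self.floor_y <= self.contact_height

    def update(self, foot_y, dt):
        """Feed one frame; return (vertical velocity, stomped this frame?)."""
        if self.prev_y is None:
            self.prev_y = foot_y  # first frame: no velocity yet
            return 0.0, False
        vy = (foot_y - self.prev_y) / dt  # finite-difference velocity
        landed = self._grounded(foot_y) and not self._grounded(self.prev_y)
        # Check the faster of the last two frames, since the impact frame
        # may be cut short by the floor.
        stomped = landed and min(vy, self.prev_vy) <= -self.stomp_speed
        self.prev_y, self.prev_vy = foot_y, vy
        return vy, stomped

detector = StompDetector()
for height in [0.50, 0.30, 0.02]:  # foot driven down over three 0.1 s frames
    print(detector.update(height, dt=0.1))
```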

Sound Design

Using the rig's velocity and stomp data, the team dynamically added sound to the user's actions. This was initially set up and mixed in FMOD, then finalised and hard-coded in Pentagram's own Unity-based audio system.
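
How the movement data fed the mix isn't documented beyond this. A typical approach is to map a joint's speed onto a loop's volume and pitch, and an impact speed onto a one-shot's volume. The Python sketch below assumes a clamped, normalised speed, a squared volume curve and a linear pitch range; the function names and every constant are hypothetical choices, not the project's mix.

```python
def motor_params(speed, max_speed=3.0, min_pitch=0.8, max_pitch=1.4):
    """Map a joint speed (m/s) to (volume, pitch) for a looping motor sound."""
    # Normalise and clamp so fast flailing can't push values out of range.
    t = min(max(speed / max_speed, 0.0), 1.0)
    volume = t * t  # squared curve: near-silent when still, swelling as movement speeds up
    pitch = min_pitch + (max_pitch - min_pitch) * t  # pitch rises linearly with speed
    return volume, pitch

def stomp_volume(impact_speed, max_impact=4.0):
    """Scale a one-shot stomp sample by how hard the foot came down."""
    return min(abs(impact_speed) / max_impact, 1.0)

for speed in [0.0, 1.5, 3.0, 6.0]:
    print(speed, motor_params(speed))
print(stomp_volume(-2.8))
```

In a middleware setup such as FMOD Studio, values like these would usually be sent to the sound engine each frame as event parameters, leaving the actual curves to be tuned in the mixing tool.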

The sounds were recorded and produced by Obelisk Audio using Ableton Live. They created motor and piston sounds from bicycle pumps and small electrical appliances.

The ambient soundscape was created using a Teenage Engineering OP-1 and a Critter & Guitari Organelle, fed through Pure Data patches and Mutable Instruments Clouds. This was mixed with public domain field recordings from a construction site in Tokyo.

Gameplay

With the mech up and running, the team began implementing more traditional gameplay elements for the user to learn and discover. It was immediately clear that every interaction had to be either pose-based or a side effect of the mech's interaction with Unity's physics engine.

The team settled on a pose-activated 'Transform!' ability: by holding a heroic pose, the user cycles through three hand-mounted tools. Each tool stays active for a set duration, then disappears. The tools were designed to need minimal interaction while visibly changing the environment. The Laser can burn concrete and explode barrels, the Brick Gun fires bricks across the environment, and the Attractor draws loose props towards it.

In a short time, the user can cause a satisfying mess, which remains throughout the duration of their session.
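
The 'Transform!' ability described above can be read as a small state machine: a pose-hold timer that, once full, activates the next tool in the cycle and starts that tool's countdown. The Python sketch below assumes a pose test of "both hands above the head", a release-before-retrigger rule, and fixed hold and tool durations; the pose, the timings and all names are hypothetical, since the actual values aren't given.

```python
TOOLS = ["Laser", "Brick Gun", "Attractor"]

def is_heroic_pose(left_hand_y, right_hand_y, head_y):
    """Hypothetical pose test: both hands raised above the head."""
    return left_hand_y > head_y and right_hand_y > head_y

class TransformAbility:
    """Hold the pose to activate the next tool; each tool expires after a set time."""

    def __init__(self, hold_time=1.0, tool_duration=10.0):
        self.hold_time = hold_time  # hypothetical: seconds the pose must be held
        self.tool_duration = tool_duration  # hypothetical: seconds a tool stays active
        self.held = 0.0
        self.armed = True  # the pose must be released before it can trigger again
        self.next_index = 0
        self.active = None
        self.remaining = 0.0

    def update(self, pose_held, dt):
        """Advance one frame; return the name of the active tool, or None."""
        # Count down the current tool and drop it when its time is up.
        if self.active is not None:
            self.remaining -= dt
            if self.remaining <= 0.0:
                self.active = None
        if pose_held and self.armed:
            self.held += dt
            if self.held >= self.hold_time:
                # "Transform!": switch to the next tool in the cycle and restart its timer.
                self.active = TOOLS[self.next_index]
                self.next_index = (self.next_index + 1) % len(TOOLS)
                self.remaining = self.tool_duration
                self.held = 0.0
                self.armed = False
        elif not pose_held:
            self.held = 0.0  # breaking the pose resets the hold timer
            self.armed = True
        return self.active

ability = TransformAbility(hold_time=1.0, tool_duration=5.0)
# In practice pose_held would come from a test like is_heroic_pose() on each tracked frame.
frames = [True, True, False, True, True]  # 0.5 s per frame
print([ability.update(p, dt=0.5) for p in frames])
```

Requiring the pose to be released before it can fire again stops a visitor who simply keeps their arms up from flicking through every tool in a few seconds.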

Partners

Jody Hudson-Powell

Luke Powell

Project Team

Mat Hill

Sabrina Maerky